Mirjam Neelen & Paul A. Kirschner

In his LAK 2016 keynote in Edinburgh, Paul Kirschner answered the question ‘What do the learning sciences have to do with learning analytics (LA)?’ with a firm: ‘Just about everything!’ He also noted that in most LA projects and studies, the learning scientist and learning theories are conspicuously absent, which often leads – in his words – to dystopian futures.

The trigger to write this blog was first and foremost a statement that Bart Rienties made in his keynote at EARLI17 (summary and slides here), in which he said that research shows that learning design[1] (LD) has a strong impact on learner behaviour, satisfaction, and performance. This, in itself, isn’t earth-shattering for us (we’d expect effective LD, that is, LD based on evidence from the learning sciences, to positively impact learning and ineffective LD to harm it). However, it’s of paramount importance that LA are used to provide (more) evidence for those relations (between LD based on the learning sciences and learner behaviours and performance) as well as insight into the learners’ learning processes, so that LD specialists can improve the LD based on LA.

Now, one comment before we move on. In Nguyen, Rienties, and Toetenel’s study (2017) on mixing and matching LD and LA, there’s one particular phrase that caught our attention: “Persico and Pozzi argued that the learning process should not only depend on experience, or best practices of colleagues but also pre-existing aggregated data on students’ engagement, progression, and achievement” (p. 2). This is incomplete if you ask us. It should be … but also be based on evidence-informed strategies from the learning sciences and pre-existing data… and so forth. Let’s keep this in mind for the rest of this blog.

Note: This blog is by no means intended to describe the referenced studies in detail (it’s a blog and not a review article). However, we’d like to discuss some interesting findings from them and add a couple of questions and critical comments that will hopefully help to make even more progress in this space.

Different ways of approaching LA

All studies discussed here (Bakharia et al., 2016, Lockyer et al., 2013, Rienties et al., 2015, Rienties et al., 2016, and Nguyen et al., 2017) were carried out in a higher education setting (as are most studies in this space) and all attempted to compare how blended and online modules were designed with how this design impacted learner behaviour and performance. Also, in all studies there was close collaboration between what the researchers call LD specialists[2] (actually teachers) and LA experts, which we’d say is a good start (especially because the researchers work with the strategy as outlined in the LD document from the Open University). However, we would like to flag that teachers aren’t necessarily LD specialists (the latter have learning sciences knowledge and expertise).

The studies also differ in that they approach LA from two different angles. The ones that Rienties and his colleagues carried out (2015, 2016, 2017) focus on aggregating data from a large set of blended courses, trying to find patterns within that data, while Lockyer et al. (2013) and Bakharia et al. (2016) focus on actionable analytics for teachers based on their LD as applied in a specific course.

In Bakharia and colleagues’ (2016) ‘Learning Analytics for Learning Design Conceptual Framework’, as illustrated below, the teacher plays a central role in bringing necessary contextual knowledge to the review and analysis of LA in order to support decision-making in relation to LD improvement.

Conceptual Framework: Learning analytics for learning design (Bakharia et al., 2016)

This basically means that all LA implementations are based on the teachers’ requests, as identified through interviews. Rienties and colleagues approach this differently (although they too work with teachers). In their research, teachers specified the learning outcomes for each module, reviewed all available learning materials within the modules, and classified the types of activity[3], as well as estimating the time that learners were expected to spend on each activity (workload).

In order to classify the types of activity, the teachers used an LD taxonomy[4] which identifies seven types of learning activity, as seen in the table below:

| Activity Category | Type of activity | Example |
| --- | --- | --- |
| Assimilative | Attending to information | Read, Watch, Listen, Think about, Access. |
| Finding and handling information | Searching for and processing information | List, Analyse, Collate, Plot, Find, Discover, Access, Use, Gather. |
| Communication | Discussing module related content with at least one other person (student or tutor) | Communicate, Debate, Discuss, Argue, Share, Report, Collaborate, Present, Describe. |
| Productive | Actively constructing an artefact | Create, Build, Make, Design, Construct, Contribute, Complete. |
| Experiential | Applying learning in a real-world setting | Practice, Apply, Mimic, Experience, Explore, Investigate. |
| Interactive / adaptive | Applying learning in a simulated setting | Explore, Experiment, Trial, Improve, Model, Simulate. |
| Assessment | All forms of assessment (summative, formative and self assessment) | Write, Present, Report, Demonstrate, Critique. |

Learning design taxonomy
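To make the mapping step a bit more tangible, here is a minimal sketch of how a teacher's classification plus workload estimates could be turned into a per-category workload profile for one module. This is our own illustration, not the procedure used in the studies: the function name `workload_profile`, the category labels as strings, and the example hours are all assumptions made up for this sketch.

```python
from collections import defaultdict

# The seven activity categories of the LD taxonomy in the table above.
CATEGORIES = (
    "assimilative",
    "finding and handling information",
    "communication",
    "productive",
    "experiential",
    "interactive/adaptive",
    "assessment",
)


def workload_profile(activities):
    """Return each category's share of the total planned study time.

    `activities` is a list of (category, planned_hours) pairs, i.e. one
    teacher's mapping of a module. Unused categories get a share of 0.
    """
    hours = defaultdict(float)
    for category, planned_hours in activities:
        if category not in CATEGORIES:
            raise ValueError(f"unknown activity category: {category!r}")
        hours[category] += planned_hours
    total = sum(hours.values())
    if total == 0:
        raise ValueError("module has no planned workload")
    return {category: hours[category] / total for category in CATEGORIES}


# Hypothetical module mapping (hours invented purely for illustration).
example_module = [
    ("assimilative", 6.0),
    ("assimilative", 2.0),
    ("communication", 1.0),
    ("assessment", 1.0),
]
profile = workload_profile(example_module)
```

A profile like this only says how planned time is distributed across activity types; it says nothing yet about why the module was designed that way.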

Both approaches have advantages and disadvantages. Asking only the teacher what LA are needed (although their input is definitely required as they understand the context in which the data were collected as well as their own goals) is not the best approach. While the benefit of this approach is that the data are possibly useful and meaningful to the teacher, teachers are most often looking for ‘simple’ answers to everyday practical problems and overlook the need for certain LA for more structural problems. We can compare this to asking audiophiles in the 1970s what they needed. Their answer would have been: lighter turntable arms, better phonograph needles, harder / more wear-resistant LPs, etc. They would not have answered: digital devices, CDs, and so forth.

Overall, we’d say that this method raises the question of which experts need to be involved at what point in the ‘LA design’ process and what this ‘co-design’ process ideally should look like. Kapros and Peirce (2014) describe an attempt at such a co-design process (with L&D Managers) that might be useful as a starting point.

What Rienties et al. (2015, 2016) do is different in that they’re trying to investigate overall patterns, attempting to answer the question of what the impact of LD is on learner behaviour, engagement, and performance. This seems useful because, for example, it could potentially provide teachers with the opportunity to compare their own course LD with that of other teachers and learn from them through LA visualisations.

The following insights show how looking at overall patterns can be very enlightening. Now, ears wide open, please. Rienties and colleagues (2015) found that learner satisfaction wasn’t related to academic retention at all! More importantly, they add, the so-called ‘learner-centred’ LD activities that had a negative effect on learner experience had a neutral to even positive effect on academic retention. So, the learners didn’t like it but they still stuck with it. We have blogged about this before (for example here and here) and it would be nice if we could finally all accept that learning can be hard sometimes and that this difficulty, combined with making mistakes, persistence, and receiving feedback, is actually critical for learning. The authors stress that a focus on learner satisfaction might actually distract institutions from understanding the impact of LD on learning.

Another great finding from the same authors is that the primary predictor of academic retention was the relative number of communication activities (in this case defined as discussing module related content with a peer or a teacher).

So, some really good stuff here and it would be marvellous if we’d see more of this type of research. We also have some questions that will hopefully contribute to making the research even better.

The elephant in the room

There’s one major question that we’d like to ask. All researchers acknowledge ‘pedagogical intent’. Bakharia and colleagues (2016) discuss this explicitly, stating that “[T]he field of learning design allows educators and educational researchers to articulate how educational contexts, learning tasks, assessment tasks and educational resources are designed to promote effective interactions between teachers and students, and students and students, to support learning” (p. 331). So, again, although it’s a good idea to analyse the LD and consider the pedagogical intent, what seems to be missing in all these studies is the question of why the LD specialist designed the module this way. What did they base their design decisions on? What theories or paradigms were at the foundation of their thinking and designing? We repeat: An LD specialist has studied and learned to apply theories from the learning or educational sciences and that’s quite a different area of expertise than having studied to become a teacher.

Ideally, the LD specialist would decide what the most effective LD is based on learning theories from the learning / educational sciences for which there is adequate empirical evidence. For example, when learners need to remember concepts or facts, they need to apply strategies such as dual coding (Clark & Paivio, 1991) and/or retrieval practice (e.g., Karpicke & Roediger, 2007). When they need to apply knowledge in various contexts, they need, for example, variable / distributed practice (Van Merriënboer & Kirschner, 2017) and feedback (e.g., Boud & Molloy, 2013 and Hattie & Timperley, 2007). So, it is important to understand why the LD specialist designed the module the way (s)he did. This matters in particular because, as Kirschner discussed in a previous blog, teachers’ LDs are probably not based on evidence from the learning sciences, as this is usually not part of the teachers’ training curriculum.

In other words, the pedagogical intent alone is not sufficient to get useful LA (go back to the analogy of the audiophile: her/his intent was better sounding music, no scratches on the LP, etc.). We need to make sure that this intent is based on proven effective learning strategies or, at least, that we find a way to explicitly label the LD decisions somehow (e.g., relate them to the learning objectives and their concomitant evidence-informed strategies). Even the labelling that Rienties and colleagues carried out is not enough because, without the context, there’s no way to determine if the activity type was the right design decision in the first place.

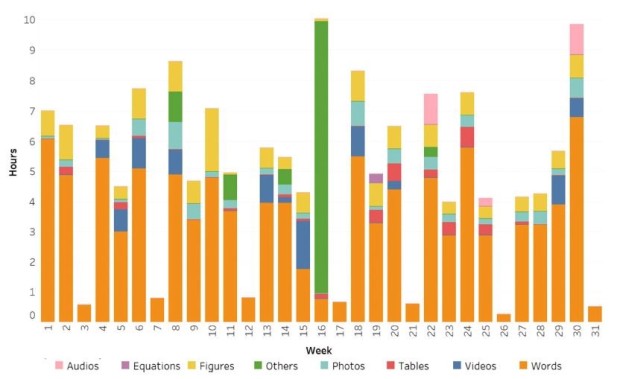

Let’s look at some examples to illustrate the point. Nguyen and colleagues (2017) found that the majority of study time was allocated to assimilative activities with six formative assessments during the learning process and a final exam at the end.

LD and learning engagement of an exemplar module in Social sciences

Yes, the teacher reviewing these analytics might know why (s)he designed it this way, but that choice may well be based on beliefs rather than on evidence.

One more example. The same authors carried out a social network analysis to demonstrate the inter-relationships between different types of assimilative and other learning activities. In the image below we can see that:

- There are strong connections between the use of words with photos, tables, and figures (Hurrah for Nguyen and colleagues, who acknowledge that this finding is in line with Mayer’s multimedia principle!).

- Videos were often used in combination with finding information and productive activities. For example, learners watched a video and were then asked to answer some questions using the information from the video.[5]

Assimilative activities of an exemplar module in Social sciences
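For readers who wonder what sits behind such a network picture: one simple way to build it (not necessarily the method Nguyen and colleagues used, which we don't describe here) is to count how often two activity types occur together in the same learning task and to use those counts as edge weights. The sketch below assumes exactly that; the function name `cooccurrence_edges` and the example tasks are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_edges(tasks):
    """Count how often two activity types appear in the same task.

    `tasks` is an iterable of collections of activity-type labels.
    Returns a Counter mapping an alphabetically ordered pair to its count,
    which can serve as the edge weight in a network diagram.
    """
    edges = Counter()
    for activity_types in tasks:
        # sorted(set(...)) removes duplicates and fixes the pair order.
        for pair in combinations(sorted(set(activity_types)), 2):
            edges[pair] += 1
    return edges


# Hypothetical tasks from one module (invented for illustration only).
tasks = [
    {"words", "figures"},
    {"words", "photos", "tables"},
    {"video", "finding information", "productive"},
    {"words", "figures"},
]
edges = cooccurrence_edges(tasks)
strongest = edges.most_common(1)[0]
```

As with the original analysis, counts like these are purely descriptive: they show which activity types go together, not whether combining them was a sound design decision.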

Now, we know we’re a bit stuck in a groove here (a broken record?) but the main question is: why was the learning designed the way it was designed? In other words, what theories formed the basis for the design to ensure the most effective and/or efficient approach towards achieving the desired objectives? For example, what was the purpose of using the materials? We could label the learning materials (such as videos or words) with the learning objective, so that it’s clear what the learner is attempting to achieve by interacting with the materials, and with the underlying theory (e.g., dual coding). We strongly argue that only if we understand the underlying reasons can LD specialists (who in this case are also the teachers) make informed decisions on how to adjust their design if the data show that learner behaviour is different from what was expected.
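What such labelling could look like in practice is sketched below. This is only our own illustration under stated assumptions: the `DesignDecision` record, its field names, and the example activities are hypothetical and do not come from any of the studies discussed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DesignDecision:
    """One learning activity plus the reasoning behind it (hypothetical schema)."""

    activity: str
    category: str  # one of the seven LD taxonomy categories
    learning_objective: Optional[str] = None
    strategy: Optional[str] = None  # e.g. "dual coding", "retrieval practice"
    evidence: Optional[str] = None  # a reference supporting the strategy

    def is_grounded(self) -> bool:
        """True only if objective, strategy and evidence are all recorded."""
        return all((self.learning_objective, self.strategy, self.evidence))


def ungrounded(decisions):
    """Return the activities whose design rationale is still missing."""
    return [d.activity for d in decisions if not d.is_grounded()]


# Invented example module for illustration only.
module = [
    DesignDecision(
        activity="Video with narrated diagrams",
        category="assimilative",
        learning_objective="Remember the key concepts",
        strategy="dual coding",
        evidence="Clark & Paivio, 1991",
    ),
    DesignDecision(activity="Weekly forum discussion", category="communication"),
]
needs_rationale = ungrounded(module)
```

With labels like these attached to each activity, analytics could be read against the stated reason for the activity rather than against the activity type alone.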

In brief, the studies as discussed here are good attempts to use LA in an effective way to support learning. Just add the insights from learning sciences and it will be even better! Otherwise you might only get a better phonograph.

References

Bakharia, A., et al., (2016). A conceptual framework linking learning design with learning analytics. In: Proceedings of the Sixth International Conference on Learning Analytics & Knowledge 2016, 329–338. ACM: New York. Retrieved from https://www.researchgate.net/publication/293171856_A_Conceptual_Framework_linking_Learning_Design_with_Learning_Analytics

Boud, D., & Molloy, E. (2013). Rethinking models of feedback for learning: the challenge of design. Assessment & Evaluation in Higher Education, 38, 698-712. Retrieved from http://cmapsconverted.ihmc.us/rid=1P30Q5R64-R7MQKZ-394/Boud_2015.pdf

Clark, J. M., & Paivio, A. (1991). Dual coding theory and education. Educational psychology review, 3, 149-210. Retrieved from https://pdfs.semanticscholar.org/9710/56c64ab2de1c4e61dd9c4ba9fcba5d91f557.pdf

Hattie, J., & Timperley, H. (2007). The power of feedback. Review of educational research, 77, 81-112. Retrieved from https://www.bvekennis.nl/Bibliotheek/16-0955.pdf

Kapros, E., & Peirce, N. (2014, June). Empowering L&D managers through customisation of inline learning analytics. In International Conference on Learning and Collaboration Technologies (pp. 282-291). Springer, Cham. https://www.researchgate.net/profile/Evangelos_Kapros/publication/263302861_Empowering_LD_Managers_through_Customisation_of_Inline_Learning_Analytics/links/56eaf57c08aec6b500166fcd.pdf

Karpicke, J. D., & Roediger III, H. L. (2007). Expanding retrieval practice promotes short-term retention, but equally spaced retrieval enhances long-term retention. Journal of Experimental Psychology: Learning, Memory, and Cognition, 33, 704-719. Retrieved from http://memory.psych.purdue.edu/downloads/2007_Karpicke_Roediger_JEPLMC.pdf

Lockyer, L., Heathcote, E., & Dawson, S. (2013). Informing pedagogical action: Aligning learning analytics with learning design. American Behavioral Scientist, 57, 1439-1459. Retrieved from http://www.sfu.ca/~dgasevic/papers/Lockyer_abs2013.pdf

Nguyen, Q., Rienties, B., & Toetenel, L. (2017). Mixing and Matching Learning Design and Learning Analytics. In: Learning and Collaboration Technologies: Technology in Education – 4th International Conference, LCT 2017 Held as Part of HCI International 2017 Vancouver, BC, Canada, July 9–14, 2017 Proceedings, Part II (Zaphiris, Panayiotis and Ioannou, Andri eds.), Springer, pp. 302–316. Retrieved from http://oro.open.ac.uk/50450/

Rienties, B., & Toetenel, L. (2016). The impact of learning design on student behaviour, satisfaction and performance: a cross-institutional comparison across 151 modules. Computers in Human Behavior, 60, 333–341. Retrieved from http://oro.open.ac.uk/45383/

Rienties, B., Toetenel, L., & Bryan, A. (2015). “Scaling up” learning design: impact of learning design activities on LMS behaviour and performance. In: Proceedings of the Fifth International Conference on Learning Analytics and Knowledge – LAK ’15, ACM, 315–319. Retrieved from http://oro.open.ac.uk/43505/3/LAK_paper_final_23_01_15a.pdf

Van Merriënboer, J. J. G., & Kirschner, P. A. (2017). Ten steps to complex learning: A systematic approach to four-component instructional design (3rd edition). London, UK: Routledge.

[1] Lockyer et al. (2013) define LD as a way of documenting pedagogical intent and plans, and a way of establishing the objectives and pedagogical plans, which can then be evaluated against the outcomes. The researchers seem to make a distinction with instructional design (ID), which according to the Open University focuses on the specifics of designing learning materials that meet a given set of learning objectives. We would say that LD is actually both. It focuses on the desired outcome (learning) and the whole experience (structure, flow, steps, strategies) that the learner needs to get there. Instruction is only one of the possible approaches.

[2] The researchers cited use the term ‘LD specialists’ while they actually mean teacher/facilitator. They don’t describe how these specialists were selected or how they actually define an LD specialist. From the research it seems that the LDers are also course instructors. It should be noted that none of the LD specialists in any of the studies were actually trained learning scientists (or at least, it isn’t specified that this is the case)!

[3] Of course, classifying activities can be quite subjective and it needs to be done consistently to make sure the activities can be compared across modules. There’s no way to make this process objective but it is important that all ‘mappers’ do it the same way so that at least reliability is improved and modules can be compared. In the studies, for example, the LD team held regular meetings to improve their practices and to find agreement on the mapping process.

[4] The taxonomy was developed as a result of the Jisc-sponsored Open University Learning Design Initiative.

[5] Note that all relations are correlational; there are no causal relationships.

Thank you Mirjam and Paul for your excellent articles. I love that you provide the links to papers and research to explore further. In your studies, have you come across the step from learning to workplace performance? That is, rather than focus on assessment of learning, how can we take it one step further to measure actual performance improvement back in the business?

For example, in the ‘interactive’ part, workers can “create a model to streamline the registration process for customers buying a service” – we can measure the experiment under learning environments but what about actual business environments.

That is, can we then go one step further and say that based on that simulation, the new process reduced the number of registration bouncebacks and decreased the registration time by 75% which equates to $3.50 per registrant (or some such example).

This is the type of data that business is more interested in and how L&D may be better placed to have business support their learning programs. The above data is wonderful for L&D to understand the learning process but how can we now extend it to practical business applications. Have you come across any research in this aspect? Or research from people outside the field of Learning say in business research? This would be interesting because it’s a question that keeps coming up…

Hi Helen, thanks for your comments and very relevant and critical question. I think this particular blog and the research that Rienties and colleagues have conducted show how complicated it is to measure learning effectiveness. Then, your question even takes it a step further to actual ‘performance on the job’. Personally, I have worked on an xAPI state of the art report recently; the blog on that research is here: http://www.learnovatecentre.org/the-xapi-story-continues/. It doesn’t include many details though. Previously, we have posted this blog on xAPI: https://3starlearningexperiences.wordpress.com/2015/09/11/can-xapi-empower-the-learner/. You can look at other xAPI research that is trying to measure workplace performance one way or the other. HT2 labs is doing a lot of work in this space, for example here: https://www.ht2labs.com/resources/investigating-performance-design-outcomes-with-xapi/#.WecRfLpFweE. This article might be interesting, too: de Laat, M., & Schreurs, B. (2013). Visualizing Informal Professional Development Networks: Building a Case for Learning Analytics in the Workplace. American Behavioral Scientist, 57(10), 1421–1438. Sorry for bombarding you with all the resources, not sure how helpful that is. Short answer to your question is: yes, there is research out there but still quite ‘basic’ as it’s just… complex :).

Thanks Mirjam & Paul for a really thought provoking article. You’ve articulated really clearly something that had been bugging me, but I wasn’t quite able to explain. Excellent!

I do have a follow on question:

Much of the discourse around LA, LD, and the impact of the technologies themselves on the learning seems to assume the technologies themselves are fairly neutral; after all, a video-based activity is a video-based activity, right? It seems to gloss over the dramatic difference in usage analytics between a well-designed and a poor UI / UX. (You’d assume that a poor video interface would get less traffic than a great one.)

Most of my LA work is in your first domain – that of a very large, diverse group of learners who are not mediated by a single teacher, but generate a flood of data points. But when looking at the data I find it really complex to separate out impacts of the LD itself, and impacts of good, or poor interface design. So much so that we have often ended up merging the two together into a more generic “learning & engagement” measure.

Have you come across any studies, or pointers that could either strengthen this idea, or help us separate the 2 more cleanly?

Hi Geoff, good to see you here, I hope you’re well! Thank you for the excellent question. First, I haven’t come across any studies that are tackling what you’re describing. I think what your question shows is that there are many variables (learning design and UX design and more?) to consider when using learning analytics. I think that there are two broad approaches: A. the ‘collect a lot of data and then make sense of it in hindsight’ approach and B. the ‘looking for specific things’ approach (to evaluate). In case A (your case) I’d say you need to ask the right questions when analysing the data and, based upon the answers or patterns you find, you’ll need to ask follow-up questions that you’d then need to test following approach B (in your case it would be looking at UX components). I’m not sure if I’m stating the obvious for you or if you would take a different approach?
