Mirjam Neelen & Paul A. Kirschner

There’s wide acknowledgement that learning happens in many places, at random times and for many reasons. If we want to gain insight into all those different learning experiences, we need a powerful, standardised and universal approach. That’s where xAPI comes in. xAPI (the Experience API, also known as Tin Can) is a specification for learning technology that allows us to track all kinds of learning experiences, such as courses, mobile apps, contributions to social learning platforms and even offline learning.

So that’s that. There’s a need to track disparate learning experiences and we have xAPI to do it. Great, right? Now, let’s not be too quick here, because actually, “it depends”. If we truly want to empower learners, we’re not quite there (yet?).

How xAPI works (the very basics)

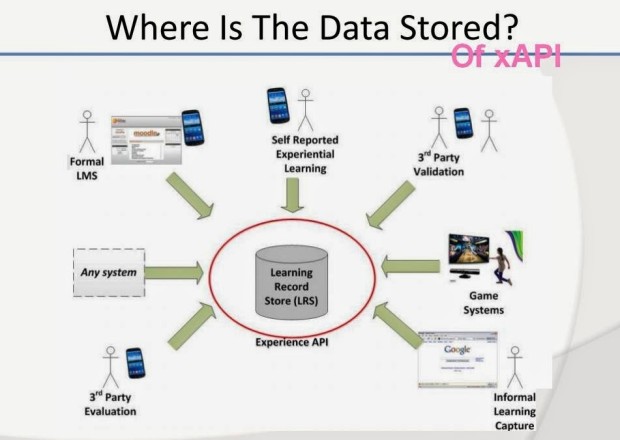

The image below shows how xAPI basically works.


Learning experiences happen in many places (in xAPI language, all the different ‘systems’ are called activity providers), and after they happen they are captured in a Learning Record Store (LRS). Each arrow in the image represents a statement describing a learning activity that took place. So basically, any activity provider can send xAPI statements, which are then collectively stored in an LRS. Statements have three fundamental elements: actor, verb and object, as in “Bob passed the Geography II assessment”. However, statements can also be far richer, such as “On an iPad, while offline, Bob read an eBook about Volcanoes in Hawaii”. While the actor-verb-object structure is a critical foundation, it’s usually the context of the statement that makes it meaningful (Brownlie, 2014).
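To make the actor-verb-object structure concrete, here is a minimal Python sketch that builds both example statements as JSON-ready dictionaries. The field names (actor, verb, object, context, platform, extensions) follow the xAPI statement format and `http://adlnet.gov/expapi/verbs/passed` is an ADL verb, but the example.com activity IDs, the “read” verb IRI, the offline extension key and the `make_statement` helper are hypothetical. Sending the statements to an LRS (endpoint, authentication, version header) is left out.

```python
import json

# Hypothetical identifiers: the example.com IRIs, the "read" verb IRI and the
# "offline" extension key are illustrative assumptions, not registered values.
def make_statement(actor_name, actor_email, verb_id, verb_label,
                   activity_id, activity_name, context=None):
    """Build a minimal xAPI-style statement: actor, verb, object (+ optional context)."""
    statement = {
        "actor": {
            "objectType": "Agent",
            "name": actor_name,
            "mbox": f"mailto:{actor_email}",
        },
        "verb": {"id": verb_id, "display": {"en-US": verb_label}},
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {"en-US": activity_name}},
        },
    }
    if context:
        statement["context"] = context
    return statement


# "Bob passed the Geography II assessment": actor, verb and object only.
simple = make_statement(
    "Bob", "bob@example.com",
    "http://adlnet.gov/expapi/verbs/passed", "passed",
    "https://example.com/activities/geography-ii-assessment",
    "Geography II assessment",
)

# "On an iPad, while offline, Bob read an eBook about Volcanoes in Hawaii":
# the same core triple, enriched with context.
rich = make_statement(
    "Bob", "bob@example.com",
    "https://example.com/verbs/read", "read",
    "https://example.com/ebooks/volcanoes-in-hawaii",
    "Volcanoes in Hawaii (eBook)",
    context={
        "platform": "iPad",
        "extensions": {"https://example.com/extensions/offline": True},
    },
)

print(json.dumps(rich, indent=2))
```

Both statements share the same skeleton; only the second carries the context that, as argued above, makes it meaningful.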

This is where one of the first challenges for xAPI comes in: someone needs to design the statements for the different activity providers. This can be as simple as designing a statement for everything the activity provider is able to “do”, such as “<user> tagged <content X>” and “<user> uploaded <content Y>”. However, if it’s done this way, you could end up with an overabundance of data in the LRS. Some would argue that by gathering sufficient data (i.e., statements), you would be able to identify valuable or more effective learning experiences. Unfortunately, it’s not that easy. Learning analytics is quite different from ‘general analytics’. To obtain meaningful learning analytics outcomes, we need to carefully identify beforehand what the learning intent is.

Another risk of collecting a lot of data and then analysing it to see if something interesting comes up is that nearly all of the data and analyses will be correlational rather than causal. And though correlational analyses can sometimes be used for prediction, they can’t always tell us how to change or influence learning or performance, that is, determine what causes learning. It’s, therefore, critical to decide what learning data we want to track, and why, before we start. In other words, we need to define a ‘model’ of the learning so as to identify what data is critical. Compare this to the oil or mining industries. They don’t just gather random data or drill/sink holes anywhere. They have geological and tectonic models and know what data they need to gather to tell them where the greatest probability lies of finding the natural resources they seek.
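As a rough illustration of deciding what data is critical before collecting it, here is a minimal Python sketch in which an activity provider only turns an event into a statement when its verb has been declared, up front, as evidence for a learning objective. The objectives, verb labels and the `design_statements` helper are hypothetical, and the statements are simplified dictionaries rather than full xAPI statements.

```python
from dataclasses import dataclass

# Hypothetical learning intent: which verbs count as evidence for which
# objective. These objectives and verb labels are illustrative assumptions.
LEARNING_INTENT = {
    "apply-tagging-guidelines": {"tagged"},
    "share-worked-examples": {"uploaded", "commented"},
}


@dataclass(frozen=True)
class Event:
    user: str
    verb: str
    content: str


def design_statements(events, intent):
    """Keep only events whose verb serves a declared objective and label
    each resulting (simplified) statement with the objective(s) it supports."""
    objectives_by_verb = {}
    for objective, verbs in intent.items():
        for verb in verbs:
            objectives_by_verb.setdefault(verb, []).append(objective)

    statements = []
    for event in events:
        if event.verb in objectives_by_verb:
            statements.append({
                "actor": event.user,
                "verb": event.verb,
                "object": event.content,
                "objectives": sorted(objectives_by_verb[event.verb]),
            })
    return statements


events = [
    Event("alice", "tagged", "content X"),
    Event("alice", "uploaded", "content Y"),
    Event("alice", "viewed", "content Z"),
    Event("bob", "logged-in", "platform"),
    Event("bob", "commented", "content Y"),
]

kept = design_statements(events, LEARNING_INTENT)
print(f"{len(kept)} of {len(events)} events become statements")
for statement in kept:
    print(statement)
```

Here three of the five events become statements, and the “viewed” and “logged-in” events never reach the LRS. The flip side is that anything the intent did not anticipate is lost, which is why the model has to be designed with care.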

The consequence of a focus on meaningful learning data is that learning professionals need to learn how to design statements. They also need to collaborate closely with data scientists, because data scientists know how to work with data but don’t necessarily know how to evaluate learning impact.

There are many case studies out there. Some focus on tracking content (e.g., Luminosity LMS) and some on tracking actions (e.g., Torrance Learning for the Museum Project, or Sean Putman’s example of a software application). The last of these gives the clearest indication of an individual’s learning, because users have to follow a specific process in the software and xAPI can help track whether they are using the software correctly. In Sean Putman’s case study, it’s clear how xAPI can support learning and empower the learner. But how would this work over time, when learning objectives change or when learning experiences add up?

How “learner-centric” is xAPI?

The original Advanced Distributed Learning (ADL) initiative was launched to ensure that federal employees could take full advantage of technological advances in order to “acquire the skills and learning needed to succeed in an ever-changing workplace”. Hence, xAPI was originally intended to be learner-centric. Others take it a step further, for example Marray and Silvers (2013), who state that “people need ownership over their learning experiences and the data that reflects what they have learned and what they have achieved”.

Now, that’s a really interesting idea. Basically, Marray and Silvers argue that individual learners need their own personal LRS, so that they gain insight into their own learning experiences and perhaps into what they have achieved over time. Sounds cool. However, it’s easier said than done. Collecting statements in an LRS is one thing, but the real value, and the real challenge, lies in aggregating all of the statements effectively and efficiently and then inferring from them what, how and when learning takes or has taken place. This means that the LRS needs to be complemented with strong analytics and intuitive visualisations to help users (e.g., learners, L&D/HR professionals, instructors and trainers) interpret the learning data. xAPI allows flexible, fine-grained and detailed reporting, but with a risk of information overload if the data isn’t filtered carefully so that only the essential items are retained.
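To illustrate the aggregation step, here is a minimal Python sketch that filters a learner’s statements down to a chosen set of ‘essential’ verbs and then summarises them per activity: how often each verb occurs and over what period. The statements, the short verb labels (used instead of full verb IRIs) and the `summarise` helper are hypothetical; a real report would first query the statements from an LRS.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical statements as they might come back from an LRS query,
# trimmed to the fields this summary needs.
statements = [
    {"actor": "mailto:bob@example.com", "verb": "attempted",
     "object": "geography-ii-assessment", "timestamp": "2015-03-02T10:00:00+00:00"},
    {"actor": "mailto:bob@example.com", "verb": "failed",
     "object": "geography-ii-assessment", "timestamp": "2015-03-02T10:40:00+00:00"},
    {"actor": "mailto:bob@example.com", "verb": "attempted",
     "object": "geography-ii-assessment", "timestamp": "2015-03-09T09:00:00+00:00"},
    {"actor": "mailto:bob@example.com", "verb": "passed",
     "object": "geography-ii-assessment", "timestamp": "2015-03-09T09:35:00+00:00"},
    {"actor": "mailto:bob@example.com", "verb": "read",
     "object": "volcanoes-in-hawaii", "timestamp": "2015-03-05T20:15:00+00:00"},
]


def summarise(statements, essential_verbs):
    """Aggregate statements per (actor, activity), keeping only essential verbs."""
    summary = defaultdict(lambda: {"verbs": defaultdict(int), "first": None, "last": None})
    for s in statements:
        if s["verb"] not in essential_verbs:
            continue  # filtered out to limit information overload
        when = datetime.fromisoformat(s["timestamp"])
        entry = summary[(s["actor"], s["object"])]
        entry["verbs"][s["verb"]] += 1
        entry["first"] = when if entry["first"] is None else min(entry["first"], when)
        entry["last"] = when if entry["last"] is None else max(entry["last"], when)
    return summary


report = summarise(statements, essential_verbs={"attempted", "passed", "failed"})
for (actor, activity), entry in report.items():
    days = (entry["last"] - entry["first"]).days
    print(actor, activity, dict(entry["verbs"]), f"over {days} days")
```

With these sample statements, the eBook reading drops out because “read” isn’t treated as essential, and the assessment summary shows two attempts, one fail and one pass spread over six days. The sketch simply takes `essential_verbs` as given; who should choose them is a separate question.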

The question is: who decides what “the essential items” are? Is it the individual learner? If so, then I’m not quite sure how that would work. It could work if there were a tool to help set up or determine learning objectives first. If that existed, we would then need an option to map learning experiences onto those specific learning objectives. This, however, implies a very conscious and experienced learner with strong metacognitive skills, which is not exactly the profile of most learners. If others decide what data needs to be collected, for example the company/business/institute in collaboration with L&D, then this implies that the learning journey is mapped out quite formally. Again, this can work for a specific learning objective, but (1) how do we ensure that the learning is meaningful not only to the business but also to the learner, and (2) how does the data stay meaningful for the learner over time, for example when learning objectives change, or when learning experiences accumulate from all sorts of nooks and crannies? How do we then prevent the data from losing context? How can xAPI truly empower learners over time?
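One way to picture the mapping problem is a learner-maintained objective map that changes over time. The minimal Python sketch below maps each experience onto the objective map that was in force when it happened, and keeps the experiences that fit no objective visible rather than silently dropping them. The objectives, activity IDs, dates and both helper functions are hypothetical.

```python
from datetime import date

# Hypothetical, learner-defined objective maps. Each version says which
# activities count as evidence for which objective from a given date onwards.
OBJECTIVE_MAPS = [
    (date(2015, 1, 1), {"geography-ii-assessment": "Pass Geography II"}),
    (date(2015, 3, 1), {"geography-ii-assessment": "Teach physical geography",
                        "volcanoes-in-hawaii": "Teach physical geography"}),
]


def objective_map_for(day):
    """Return the most recent objective map that was in force on `day`."""
    current = {}
    for start, mapping in sorted(OBJECTIVE_MAPS, key=lambda item: item[0]):
        if start <= day:
            current = mapping
    return current


def map_experiences(experiences):
    """Group (day, activity) experiences by objective; keep unmapped ones visible."""
    by_objective, unmapped = {}, []
    for day, activity in experiences:
        objective = objective_map_for(day).get(activity)
        if objective is None:
            unmapped.append((day, activity))  # this experience has lost its context
        else:
            by_objective.setdefault(objective, []).append(activity)
    return by_objective, unmapped


experiences = [
    (date(2015, 2, 10), "geography-ii-assessment"),
    (date(2015, 2, 12), "volcanoes-in-hawaii"),
    (date(2015, 3, 9), "volcanoes-in-hawaii"),
    (date(2015, 3, 10), "podcast-on-plate-tectonics"),
]

by_objective, unmapped = map_experiences(experiences)
print(by_objective)
print("No objective:", unmapped)
```

Mapping with the objectives in force at the time preserves the original context, but it also means the February eBook reading never counts towards the later objective; re-mapping everything under the newest map gives the opposite trade-off. The sketch doesn’t settle which is right.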

Whoever has an idea, please shoot!

References

Advanced Distributed Learning. (n.d.). Advanced Distributed Learning history [Online]. Retrieved 05-03-2015 from http://www.adlnet.gov/overview/

Brownlie, R. (2014). The design of a universal ontology and Tin Can implementation for defining, tracking, sorting and visualising learner interactions across a multilingual corporate enterprise [Thesis].

Marray, K., & Silvers, A. E. (2013). A learning experience. Journal of Advanced Distributed Learning Technology, 1, 7-13.

In my experience of enabling xAPI, getting meaningful data requires extraordinary coordination of effort between instructional designers, developers and leadership. It’s not impossible, but how often do those planets align in an L&D department? As I’ve recently learned, it’s not a nimble solution. If the content or the objectives change, then the whole structure and taxonomy of data tracking must change. And content always changes…


I agree, Roger. I think that ‘change management’ is one of the main challenges in an xAPI ecosystem. Can you plan ahead? Do you need a reset point every so often? It also depends on how ‘meaningful’ you want the data to be. Ideally, the meaning is obvious and in the context, but I’m not sure that depth is possible to manage in the long run.
