Mirjam Neelen & Paul A. Kirschner

We recently came across a great article (check it out!) from Limbu, Jarodzka, Klemke, and Specht (2018) that inspired us to write this blog. It provides a literature overview of 78 studies that use sensors and AR to train apprentices with recorded expert performance. It prompted us to explore when sensor-based AR is beneficial for training apprentices.

First, let’s look at what apprenticeship is and what role recording expert performance plays.

The Apprenticeship Model

In the guild system of the Middle Ages, an apprentice was a learner of a trade who was supervised by a master craftsman from a craft guild or town government. These young people were employed as an inexpensive form of labour in exchange for food, lodging, and formal training in the craft, trade, or profession (Wikipedia). More recently, Collins, Brown, and Newman (1989) explain Jean Lave’s work from 1988 on apprenticeship, which refers to methods for carrying out tasks in a certain domain (in Lave’s case: tailoring). Apprentices learn these methods through observation of an expert, coaching by the expert, and practice in the vicinity of the expert (e.g., modelling, coaching, fading). In apprenticeships (think of an intern in a hospital or a dual student in a garage), the interplay between observing, scaffolding, and increasingly independent practice is important for apprentices to learn to self-monitor and self-correct their skills.

Of course, there can also be learning tasks that you can’t easily observe, for example when they involve solving complex problems. In this case, the focus is on cognitive and metacognitive processes and skills. Because these aren’t observable, they need to be ‘externalized’. For such tasks, Collins and colleagues have introduced the term ‘cognitive apprenticeship’, where conceptual and factual knowledge is illustrated and situated in the context of its use.

But if we want to design instruction based on an apprenticeship model, we must somehow capture expert performance to understand what ‘good’ looks like. The question is how?

How Can We Capture Expert Performance?

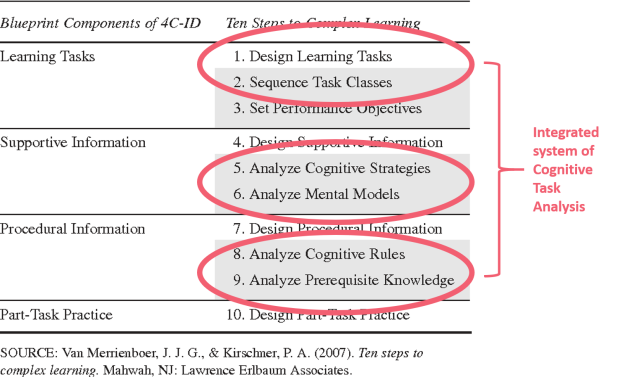

There are procedures, such as cognitive task analysis (CTA), for gaining insights into experts’ mental processes so that we can organise and give meaning to their observable behaviour (Van Merriënboer & Kirschner, 2018). For example, in the 4C/ID model, steps 2 and 3 (design performance assessments and sequence learning tasks), 5 and 6 (analyse cognitive strategies and analyse mental models), and 8 and 9 (analyse cognitive rules and analyse prerequisite knowledge) make up an integrated system of CTA (see image).

There are two major limitations when it comes to CTA. The first is that it’s very time-consuming and the second is that we can’t duplicate expert performance through this process, simply because there will still be ‘things’ (considerations, steps, details) that remain invisible. After all, we’re relying on the expert telling us what they do, and that brings us to the other well-known problems in ‘recording’ expert performance that mustn’t be taken lightly. One is that experts often underestimate the complexity of a task. This is normal because, for them, it’s easy! They often don’t even need to think about it. Also, experts are often unaware of the huge amount of knowledge behind their performance (i.e., it’s been amassed into larger chunks or encapsulated), which leads to a third problem, namely that they often ‘skip’ steps without being aware of this (i.e., the steps have been automatized and combined). These things are so obvious and automated for them that, for them, they go without saying. In German they call this Fingerspitzengefühl; in English we might say that the knowledge has become implicit. This is problematic for apprentices because they need insight into all of the steps (i.e., they still need to learn to recognise patterns, which will allow them to eventually skip steps themselves). In other words, experts often struggle to make their knowledge explicit and to do so in a precise manner (see two of our previous blogs on expertise, here and here). This is where sensors can play a role.

Based on work by Chi et al., 1982; De Groot, 1965; Kalyuga, Chandler & Sweller, 1998; Schneider & Shiffrin, 1977; Wilson & Cole, 1996

What Role Can Sensors Play in Recording Expert Performance?

Sensors can support CTA without having to rely primarily on experts explaining what they do. Sensors have “the capability to unobtrusively measure observable properties … in representative tasks” (Limbu et al., 2018, p. 2). Although Limbu and colleagues note that very few studies have claimed to actually address the cognitive process of the expert with the support of sensors, sensor data can indeed be used to do exactly that. For example, Jarodzka et al. (2013) used eye tracking data during the task analysis to explicate the cognitive process of experts and better understand their actions. After recording the experts’ eye movements and eye movement patterns, they confronted the experts with salient movements and patterns, asking them to verbalise what they were looking at and why. This is called cued retrospective reporting. In other words, in many cases sensors can make the invisible visible (in an objective manner!), which then helps a) the learning designer create a better learning environment and b) apprentices get a better understanding of the process.

Capturing performance is one thing, but what about the actual training part? Why would you go for a sensor-based AR design for training apprentices? Let’s look at some generic examples first and specific examples next.

Examples of Sensor-Based AR For Training Apprentices

Some generic examples of how sensor-based AR has been used for training purposes:

- By making the invisible aspects of a task visible to apprentices, apprentices can achieve a better understanding of the process.

- By making the invisible aspects of a task visible, the instructional designer can better design a learning situation.

- Recorded expert performance data can be used to assist experts in verbalising what they have done and why they have done it. In other words, it can help them to identify the steps that have been omitted.

- Recorded expert performance data can be used to assist experts and/or learning designers by enabling them to create training materials while demonstrating the task.

- Apprentices can replay the expert’s demonstration, which includes the sensor data, in a much richer manner while doing the task at the same time.

- Expert performance data can be used for providing formative feedback by using expert performance as a benchmark.

- Sensor systems can read and log apprentices’ performance which can allow an expert to keep track of an apprentice’s progress.
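To make the benchmarking idea from the list above a bit more concrete, here is a minimal Python sketch. It is not taken from Limbu et al. or from any of the reviewed systems. It assumes, purely for illustration, that a sensor yields a single numeric trace per attempt (say, a tool angle sampled over time) and that a fixed tolerance is a sensible threshold. The function names `resample` and `formative_feedback` and the example values are hypothetical.

```python
from statistics import mean


def resample(trace, n):
    """Linearly interpolate a trace to n evenly spaced samples (n >= 2)."""
    if len(trace) == 1:
        return [trace[0]] * n
    out = []
    for i in range(n):
        pos = i * (len(trace) - 1) / (n - 1)  # fractional index into trace
        lo = int(pos)
        hi = min(lo + 1, len(trace) - 1)
        frac = pos - lo
        out.append(trace[lo] * (1 - frac) + trace[hi] * frac)
    return out


def formative_feedback(expert, apprentice, tolerance):
    """Compare an apprentice trace with a recorded expert trace.

    Assumption: both traces measure the same quantity over the whole task,
    so stretching the apprentice trace to the expert's length is acceptable.
    """
    if len(expert) < 2 or not apprentice:
        raise ValueError("need at least two expert samples and one apprentice sample")
    aligned = resample(apprentice, len(expert))
    deviations = [abs(a - e) for a, e in zip(aligned, expert)]
    # Indices (in expert time) where the apprentice strays beyond the tolerance
    flagged = [i for i, d in enumerate(deviations) if d > tolerance]
    return {"mean_deviation": mean(deviations), "flagged_samples": flagged}


# Hypothetical example: tool angle in degrees at four moments of a task
expert_trace = [0.0, 10.0, 20.0, 30.0]
apprentice_trace = [0.0, 10.0, 35.0, 30.0]
print(formative_feedback(expert_trace, apprentice_trace, tolerance=5.0))
```

In a real training setting the flagged moments would only be a starting point for feedback. Deciding which deviations actually matter still requires the kind of task analysis described earlier.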

Let’s move on to specific examples. The image below is from Limbu et al.’s article. It nicely organises various instructional methods (they call them Instructional Design Methods), using the four components from the 4C/ID model.

4C/ID Model (Van Merriënboer & Kirschner, 2018)

They’ve mapped each method to the skills you can train with that method and they’ve given examples of what kind of sensors you can use for each of them. We’ll discuss one example for each component of the 4C/ID model.

This YouTube video shows an interesting example of an augmented mirror application in sports coaching. Sensors record the girl’s movement and display it on the mirror which helps her to correct her movements.

This is an example of a 3D-model augmented reality medical gown. The patient’s gown carries his/her medical history (including MRIs, X-rays, etc.). All this information can be explored through the AR-enabled device (the headset that the doctor is wearing) and the affected area can be displayed on the patient’s body.

This is an example of haptic feedback in dentistry training (full research paper here). The AR environment allows students to practice. It combines the virtual tooth and mirror with the real-world view, and the result is shown through a video see-through head-mounted display. Haptic feedback is given through a probe that lets students examine the surface of the tooth, feel its hardness, and drill or cut it. There’s a second haptic device to control the virtual mirror.

Now, the question is, when would sensor-based AR be a good choice for training apprentices and when would it be a waste of money?

When is Sensor-Based AR the Way to Go for Training Apprentices?

Carmichael, Biddle, and Mould (2012) explore when AR is the way to go for learning. Not surprisingly, the answer is: It depends. Overall, AR has four advantages:

- Reality for free – augmented experiences can be richer and more ‘sophisticated’ because of the deliberate inclusion of real-world objects and behaviours.

- Virtual flexibility – The appearance and behaviour of digital artefacts can be altered according to the needs of the user or application and users can practice things that would otherwise be impossible, impractical or simply too dangerous (e.g., cell phone tower maintenance).

- Invisible interface – It doesn’t interfere with the ability to observe the real-world environment and you can switch attention seamlessly between real and virtual objects.

- Spatial awareness – Content can adjust as the user’s surroundings change.

Carmichael and colleagues show that your design considerations depend on which cognitive theory applies to the training goal. They explore the relation between four theories and their design advantages for AR. The theories are:

- Mental models – Representations of the world people have learned and use to reason about a particular content domain.

- Distributed cognition – Takes a systems approach (not an individual one). The key is the connection between all significant features contributing to the accomplishment of a task, e.g., the environment, the network of people involved, and the artefacts used.

- Situated cognition – Brown, Collins, and Duguid (1989) proposed this theory, which argues that knowledge is inseparable from doing and that all knowledge is situated within context-specific (social, cultural, physical) activity.

- Embodied cognition – Basically, this theory says that the motor system influences our cognition and vice versa. So, the body itself can provide or support models of interaction with the world. A person can perform bodily actions and then repeatedly consult the local environment to make sense of the world.

Within an apprenticeship model, all theories can come into play, depending on the learning task(s) at hand, although the design considerations vary widely from theory to theory.

Carmichael and colleagues have also created a list of general questions to check if sensor-based AR is a good idea in your context … it’s a starting point at least:

- Is there a real-world environment that the application or associated task is or should be set in?

- Is there a strong, non-arbitrary association between the virtual data and objects your application uses and some aspect of the environment?

- Is it important that details of the environment, from content to behaviour, be preserved?

- If the application supports learning a specific task, is this a non-abstract task that is already performed in the real world?

- Does the application benefit from real-world context?

Now what?

Both Limbu’s and Carmichael’s work demonstrate that various design considerations come into play when you consider implementing sensor-based AR solutions for apprentice training. Our recommendation would be to start with Carmichael’s questions to decide if sensor-based AR is beneficial for the specific learning objective. Next, if the answer is yes (or maybe), think about which theories apply to the learning goal and see if/how the advantages of AR can support that specific goal. When you’re ready to dive into design details, you can use Limbu et al.’s framework to make decisions around which sensors to use for what. Of course, there are tons of other things to consider, so… Good luck!

References

Brown, J. S., Collins, A., & Duguid, P. (1989). Situated cognition and the culture of learning. Educational Researcher, 18(1), 32-42. Retrieved from http://www.dtic.mil/dtic/tr/fulltext/u2/a204690.pdf

Carmichael, G., Biddle, R., & Mould, D. (2012, October). Understanding the power of augmented reality for learning. In E-Learn: World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education (pp. 1761-1771). Association for the Advancement of Computing in Education (AACE). Retrieved from http://gigl.scs.carleton.ca/sites/default/files/gail_carmichael/elearn2012.pdf

Collins, A., Brown, J. S., & Newman, S. E. (1989). Cognitive apprenticeship: Teaching the crafts of reading, writing, and mathematics. In L. B. Resnick (Ed.), Knowing, learning, and instruction: Essays in honor of Robert Glaser (pp. 453-494). Hillsdale, NJ: Lawrence Erlbaum. Retrieved from http://www.dtic.mil/dtic/tr/fulltext/u2/a178530.pdf

Lave, J. (1988). Cognition in practice: Mind, mathematics, and culture in everyday life. Cambridge, UK: Cambridge University Press.

Limbu, B. H., Jarodzka, H., Klemke, R., & Specht, M. (2018). Using sensors and augmented reality to train apprentices using recorded expert performance: A systematic literature review. Educational Research Review, 25, 1-22. Retrieved from https://www.sciencedirect.com/science/article/pii/S1747938X18302811

Van Merriënboer, J. J., Clark, R. E., & De Croock, M. B. (2002). Blueprints for complex learning: The 4C/ID-model. Educational Technology Research and Development, 50(2), 39-61. Retrieved from https://www.researchgate.net/profile/Jeroen_J_G_Van_Merrienboer2/publication/225798787_Blueprints_for_complex_learning_The_4CID-model/links/0912f5100d35ede27a000000.pdf

Van Merriënboer, J. J., & Kirschner, P. A. (2018). Ten steps to complex learning: A systematic approach to four-component instructional design (3rd edition). New York, NY: Routledge.