Paul A. Kirschner & Mirjam Neelen

The book “Urban Myths about Learning and Education” by Pedro de Bruyckere, Paul A. Kirschner, and Casper Hulshof ends with a section on why myths in education are so pervasive and stubborn. One of the most remarkable explanations was drawn from Farhad Manjoo’s book True Enough: Learning to Live in a Post-Fact Society. It’s what he calls the Photoshop explanation. Self-declared experts can publish anything they want thanks to the Internet. They even have access to “recognised” platforms to share their “expertise”. For example, two ladies from a foodie business called “The Green Happiness” recently claimed on Dutch television that a chicken’s egg is basically a chicken’s menstrual period and that eating eggs is therefore unhealthy. Or take the “Food Babe”, who ‘discovered’ carcinogens in your toothpaste and just about everywhere else. These are only two examples of the strong and nonsensical claims made about (un)healthy foods. These types of experts (let’s call them quacksperts) seem to come crawling out of the woodwork everywhere! It seems that there’s no way to get around them; they come to us through every medium, within every niche, and sometimes with contradictory stories, so that it becomes impossible for a layperson to determine what’s true and what’s not.

Quacksperts crawling out of the woodwork everywhere!

According to Manjoo, the danger of living in a Photoshop era is not the ever-increasing number of doctored photos, but rather that real photos are often dismissed as fake. In other words, when each photo, explanation, expert, or whatever else is questioned, then ALL photos, explanations, experts (you get the point) can be dismissed and anyone can choose their own expert. You’re free to choose the expert whose stories match your own beliefs or ideology. Facts no longer count; only feelings and beliefs do. For an extreme example, just look at Donald Trump and his supporters: this clip with Newt Gingrich is a perfect example of mistaking feelings for facts, and John Oliver’s reply illustrates how dangerous that is. It’s likely to have disastrous consequences (this is a belief we’re sharing here although it has, we’re afraid, turned out to be fact).

This problem has been around for a while, as shown by Douglas Carnine’s Why education experts resist effective practices (and what it would take to make education more like medicine), which was published in 2000. In this short report (12 pages), Carnine compares educational sciences with medical sciences. Medical scientists take scientific evidence produced by other medical scientists seriously. Educational scientists don’t. A lot of the educational research out there can even be viewed as the cause of that problem. Much research in the educational sciences leans on qualitative studies (so generalisation goes out the door), and sometimes this type of research is conducted by people who are openly hostile towards, and even disavow, quantitative and statistical research methods. Even if research is crystal clear on a certain topic, for example how best to teach children to read, some researchers knowingly ignore these results if they don’t fit their ideological preferences. According to Carnine, within education “the judgments of ‘experts’ frequently appear to be unconstrained and sometimes altogether unaffected by objective research” (p. 1). He calls this symptomatic of a field that has not evolved into a truly mature profession. In such a field, teaching methods that have been proven ineffective are still embraced, and fads such as learning styles can still be found in scientific articles (also see Paul Kirschner’s recently published Invited Comment Stop Propagating the Learning Styles Myth in Computers & Education).

Carnine concludes his report with an excellent description of one of the best and longest-running projects (Project Follow Through, with more than 70,000 students in more than 180 schools). He explains that the project evaluated five different educational models (Open Education, Developmentally Appropriate Practices, Whole Language, Constructivism/Discovery Learning, and Direct Instruction). These models broadly fall into two categories; that is child-directed-construction of meaning and knowledge and those based on direct teaching of academic and cognitive skills. The shocking part is Carnine’s description of how the impressive results of the study on the effects of these models (see Figure 1, copied from the report, which shows that direct instruction is head and shoulders above the other models) have simply disappeared into some kind of black hole (the infamous ‘circular file’).

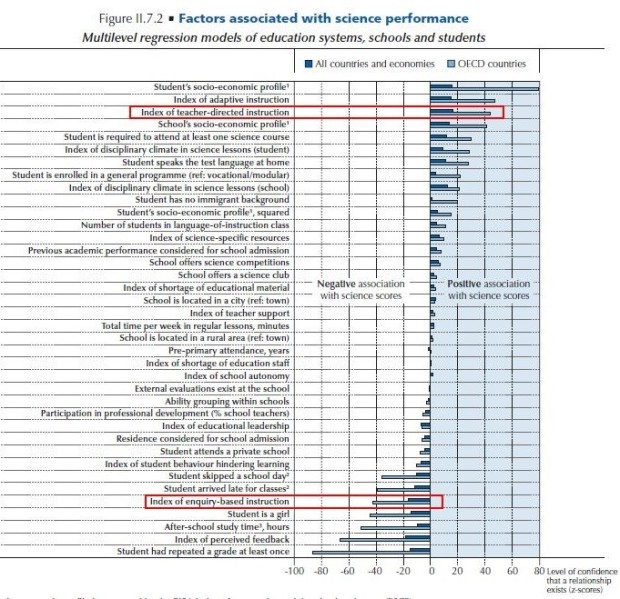

A more recent example of giving right of way to ideologies and ignoring scientific results can be found in the PISA report that was published in late 2016. Figure II.7.2 (of the 27 factors associated with science performance) clearly shows the positive relationship between direct instruction and student achievement (second strongest result; box at the top of the figure), while enquiry-based instruction shows a much weaker, actually negative, relationship (box at the bottom). What this means is that

… in almost all education systems, students score higher in science when they reported that their science teachers “explain scientific ideas”, “discuss their questions” or “demonstrate an idea” more frequently. They also score higher in science in almost all school systems when they reported that their science teachers “adapt the lesson to their needs and knowledge” or “provide individual help when a student has difficulties understanding a topic or task” (p. 228).

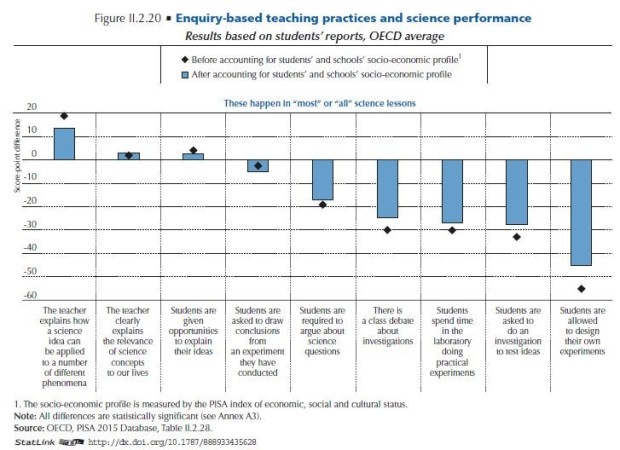

This is shown even better in the following two charts:

versus

Although this distinction is very clearly shown in the figure, the PISA report is very hesitant with regards to interpreting these results. It seems as if the authors can’t bear the thought of dismissing the enquiry-learning ideology. Pathetic and unfair!

Let’s conclude with a somewhat bleak but striking quote from Carnine’s report:

Until education becomes the kind of profession that reveres evidence, we should not be surprised to find its experts dispensing unproven methods, endlessly flitting from one fad to another. The greatest victims of these fads are the very students who are most at risk (p 1).

Need we say more?

References

Carnine, D. (2000). Why Education Experts Resist Effective Practices (And What It Would Take to Make Education More Like Medicine). Retrieved from http://www.wrightslaw.com/info/teach.profession.carnine.pdf

OECD (2016). PISA 2015 Results (Volume II): Policies and Practices for Successful Schools. Retrieved from http://www.keepeek.com/Digital-Asset-Management/oecd/education/pisa-2015-results-volume-ii_9789264267510-en#.WFgMVVOLTIU

Yes, I do think you need to say more.

“These models broadly fall into two categories; that is child-directed-construction of meaning and knowledge and those based on direct teaching of academic and cognitive skills.” They *can* broadly fall into those two categories, but complex processes like teaching and learning are multi-faceted, so why choose those two?

Also, is ‘teacher-directed instruction’ the same as ‘direct instruction’? And why do you rank it second? In the table it’s ranked third of the 27 factors after ‘adaptive instruction’ which presumably ‘adapts the lesson to [the students’] needs and knowledge’. Is adaptive instruction ‘direct’?

Dear whoever you are,

Indeed, teaching and learning are more complex than just those two. Unfortunately, the discussion is centred on these two poles. If you read my book (Ten Steps to Complex Learning), articles, and blogs, you will notice that I discuss the whole spectrum, though the basis is that the teacher/instructor (as is the case with the physician, lawyer, and so forth) is the knowledgeable one who prescribes/decides.

The second is very simple: I cannot affect socio-economic variables!

Best,

paul

I’m this person. https://logicalincrementalism.wordpress.com/about/

The discussion is centred on two poles because they are the two poles selected. Other facets, with other poles, are available.

I have read some of your work, but thank you for the recommendation.

It is the data that defines the poles. That’s why the discussion was around them. I think Figure II.2.20 shows the spectrum between the 2 poles quite well. And the data is very clear about what successful learning design in science looks like. Teachers need to be more direct in their instruction and give less autonomy to students. Logicalincrementalism, I’d be interested to see the data that reveals the other poles you suggest. Thanks

It’s the data *included* that define the poles. Other teaching-related factors listed in fig. II.7.2 include the index of adaptive instruction, after-school study time and the index of perceived feedback. Do those factors fall between the poles of teacher-directed and enquiry-based practices, or are they on different axes entirely? Dunno. Couldn’t find the analyses in a first-pass through the PISA report.

The post reports the PISA report as being ‘very hesitant with regards to interpreting these results’. The report expresses surprise at the results, but still publishes the data, and doesn’t suggest they are invalid or that the methodology was flawed, so I don’t see how the ‘pathetic and unfair!’ comment is justified.

What I feel is unfair is the way the PISA results are framed in the blogpost. The ‘type of experts’ who analysed the PISA data are clearly not in the same category as the ‘quacksperts’ referred to in the opening paragraph. But Paul and Mirjam appear to see them as one and the same and describe the PISA report as ‘giving right of way to ideologies and ignoring scientific results’. Clearly the report authors have not given way to ideologies and the scientific results have not been ignored because they’ve been published, the PISA report has commented on them, and the blogpost authors use the PISA data to support a view they already held.

I think the two you call out do fall between the poles discussed. “Adaptive instruction” is directed instruction; “after-school study time” is self-directed. I’m sure other axes do exist, but none so simple to act on at a mass scale for massive positive impact. More instruction, less self-direction.

There are some fuzzy areas, like in-class science experiments. There’s great opportunity to learn nothing from these: setting fire to stuff, larking about, having fun. But they are engaging and can spark curiosity. There’s a lot of positive benefit in self-directed or team work to make school fun, build social skills, and take a break from boring teachers droning on. Of course a balance is required, but the data suggests a balance in favour of direct instruction.

Re. experts – it’s obvious to us what an expert looks like. But to the mass population, through mass social media they are indistinguishable. Public opinion has a great effect on public policy.

Looks like the tests are tests of “science performance.” If all I want is for kids to learn “science performance” then yes, I agree direct instruction is the best method. But I want students to learn problem solving skills, reasoning skills, application skills, etc. so does direct instruction give opportunities for learning those skills? Possibly a highly skilled teacher could combine the two. I have higher goals for students beyond “science performance.”

Kelly,

Two things. First, students can only learn problem-solving skills, reasoning skills, application skills, etc. if they have the (pre)requisite knowledge and second, direct instruction helps them learn those things as it is much more than lecturing (read this blog: https://3starlearningexperiences.wordpress.com/2018/05/01/direct-instruction-gets-no-respect-but-it-works/).

paul
